Jensen Huang’s 25x Energy-Efficient Supercomputers: Status and Global Projects

Background on the 25x Claim

Nvidia CEO Jensen Huang has repeatedly stated that Nvidia’s latest accelerated computing platforms, specifically those built on the Blackwell architecture and its Grace Blackwell (GB200) superchips, can deliver up to 25 times greater energy efficiency for large AI workloads than traditional CPU-based systems or previous GPU generations161116. The gain comes from tightly coupling high-performance GPUs and CPUs, so that throughput rises much faster than power consumption.

Is Musk’s Colossus in Memphis the First 25x Supercomputer?

Colossus Overview

Elon Musk’s xAI Colossus supercomputer in Memphis is currently one of the world’s largest AI clusters, running on 200,000 Nvidia GPUs (initially H100, with plans to upgrade to Blackwell/GB200)712. The facility is designed for massive AI training and inference, and its scale and ambition are in line with the new generation of energy-efficient supercomputers.

Is It the First?

While Colossus is among the first and largest of the new wave of supercomputers leveraging Nvidia’s latest architectures, it is not the only one. The 25x efficiency claim specifically refers to systems built with Nvidia’s Blackwell and Grace Blackwell (GB200) chips, which are just beginning to roll out in 2025611. Colossus started with H100 GPUs, which are highly efficient but not the 25x Blackwell generation. However, upgrades to Blackwell-class chips are planned, and future phases of Colossus (including the planned "Colossus 2" with 1 million GPUs) may fully realize the 25x efficiency benchmark712.

Other 25x Supercomputers: Global Projects

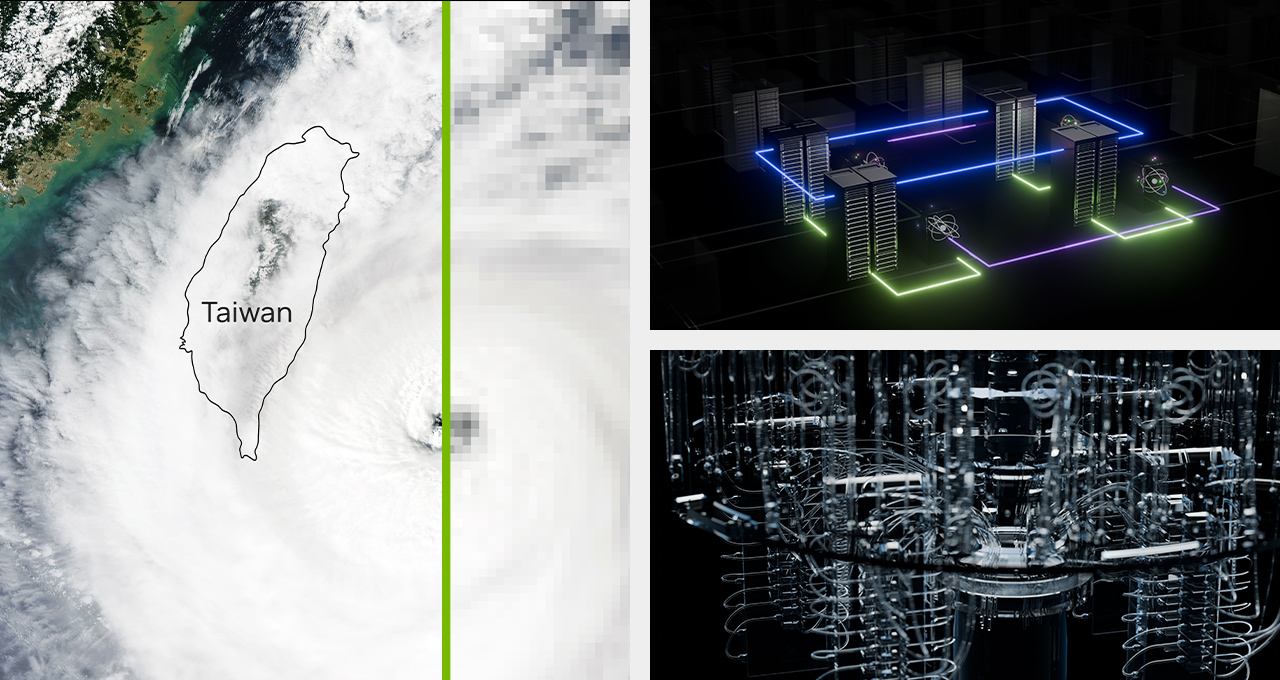

Taiwan: Foxconn, TSMC, and Nvidia AI Factory

Last month, Jensen Huang announced a partnership with Foxconn, TSMC, and the Taiwanese government to build Taiwan’s first “AI factory supercomputer,” featuring 10,000 Nvidia Blackwell GPUs381317. This system is explicitly designed to deliver the 25x energy efficiency promised by the new Blackwell architecture and will serve as a national AI infrastructure hub.

Japan: SoftBank’s Blackwell Supercomputer

Japan’s SoftBank Group, in partnership with Nvidia, is building the country’s most powerful AI supercomputer using Nvidia’s Blackwell chips51015. This was announced at the Nvidia AI Summit in Tokyo, where Jensen Huang and SoftBank founder Masayoshi Son discussed the transformative impact of AI and the importance of energy efficiency.

Europe and Beyond: Grace Hopper Supercomputers

Several research centers in Europe and elsewhere have deployed or are deploying supercomputers based on Nvidia’s Grace Hopper platform, which already leads the Green500 list for energy efficiency491418. Examples include:

JEDI (Germany)

JUPITER (Germany)

Alps (Switzerland)

Isambard-AI (UK)

Helios (Poland)

EXA1-HE (France)

These systems use Grace Hopper chips, which are a step toward the 25x efficiency, with Blackwell expected to push this further in upcoming deployments.

India: Ambani and Reliance Jio Partnership

At the Nvidia AI Summit in Mumbai, Jensen Huang and Reliance Industries Chairman Mukesh Ambani announced a partnership to build large-scale AI infrastructure in India using Nvidia’s latest technology1519. Ambani specifically referenced waiting for Nvidia’s GB200 (Grace Blackwell) chips to mature, indicating plans to deploy the most advanced, energy-efficient hardware for India’s AI ambitions.

Does Nvidia Own Any 25x Supercomputers?

Nvidia itself operates several flagship supercomputers, such as the DGX SuperPOD, which is being upgraded to use the new Grace Blackwell (GB200) Superchips6. These internal systems are used for Nvidia’s own AI research, development, and cloud offerings. Nvidia also provides reference architectures and partners with cloud providers (e.g., Microsoft, Amazon, Google, Oracle) to deploy 25x-efficient Blackwell-based clusters in their data centers611.

Will India (Ambani) or Japan (SoftBank) Build 25x Supercomputers?

India (Ambani/Reliance Jio): Yes, plans are underway to build massive, scalable AI infrastructure in India using Nvidia’s GB200 chips, aiming for the highest efficiency and performance19.

Japan (SoftBank): Yes, SoftBank is building a Blackwell-based supercomputer in partnership with Nvidia, set to be the most powerful in Japan and among the most energy-efficient globally51015.

Summary Table: Major 25x-Efficiency Supercomputer Projects

| Country/Entity | Project/Partner(s) | Nvidia Tech Used | Status/Notes |
|---|---|---|---|
| USA (xAI/Musk) | Colossus (Memphis) | H100 → Blackwell/GB200 | Largest GPU cluster; upgrades to 25x tech planned |
| Taiwan | Foxconn, TSMC, Govt | Blackwell (10,000 GPUs) | First national AI supercomputer |
| Japan | SoftBank | Blackwell (DGX B200) | Most powerful in Japan |
| India | Reliance Jio (Ambani) | GB200 | Announced, scaling up with green power |
| Europe (various) | JEDI, JUPITER, Alps, etc. | Grace Hopper (GH200) | Leading Green500, more Blackwell upgrades coming |
| Nvidia (internal) | DGX SuperPOD | GB200 | Nvidia’s own R&D and cloud systems |

Conclusion

Musk’s Colossus is among the first and largest of the new breed but is not the only or necessarily the very first 25x supercomputer.

Multiple 25x energy-efficient supercomputers are being built worldwide, with Taiwan, Japan, and India all launching national-scale projects in partnership with Nvidia.

Nvidia operates its own 25x-class supercomputers and provides technology to cloud and research partners globally.

India (Ambani) and Japan (SoftBank) are both committed to building such systems, following high-profile summits with Jensen Huang in late 2024.

Current evidence suggests the U.S. government has not yet made a definitive commitment to build a supercomputer that specifically targets the 25x energy efficiency milestone Jensen Huang described, and this indecision is influenced by both political and budgetary factors.

Recent changes in administration have led to significant uncertainty and delays in federal science funding, especially for large-scale computing projects. For example, the National Science Foundation (NSF) faced major proposed budget cuts under President Trump, including the removal of $234 million allocated for infrastructure projects such as a new supercomputer at the University of Texas37. The construction of the Horizon supercomputer, which is expected to be a major leap forward, is now delayed due to disputes between Congress and the White House over what constitutes "emergency spending," with President Trump blocking funds already approved by Congress7.

The Department of Energy (DOE) continues to announce new supercomputers, such as the Doudna system at Lawrence Berkeley National Laboratory, which use next-generation Nvidia chips and focus on AI and high-performance computing4. However, there is no explicit public statement that Doudna or other DOE projects are designed to achieve the 25x energy efficiency benchmark specifically cited by Nvidia.

Separately, the DOE has issued a request for proposals for the Discovery supercomputer, which aims to be three to five times faster than the current world leader, Frontier, and will focus on advanced AI and energy efficiency8. Yet, this project also does not explicitly mention the 25x energy efficiency target, instead prioritizing overall computational throughput and scientific impact.

In summary:

The U.S. government is continuing to invest in supercomputing, but recent administrative changes and budget disputes have delayed or threatened some major projects137.

No federal project has been publicly confirmed to target the 25x energy efficiency standard set by Nvidia, though new systems will likely be more efficient than previous generations48.

Private sector initiatives, such as OpenAI and Microsoft’s Stargate project, have received high-profile political support and may incorporate Nvidia’s most advanced technology, but these are not strictly government-owned256.

Thus, the U.S. government’s decision to build a 25x energy-efficient supercomputer remains unsettled, with current efforts either delayed, under review, or focused on broader performance and AI capabilities rather than the specific efficiency benchmark.

The convergence of Nvidia's 25x energy-efficient supercomputing architectures (e.g., Blackwell/GB200) and deep learning advancements is accelerating biotech innovation, though access varies by company strategy and partnerships. Here's how this ecosystem operates:

Access to 25x Supercomputers: Company-Specific Approaches

Recursion Pharmaceuticals

Owned Infrastructure: Built BioHive-2, a 504× H100 GPU cluster (Nvidia DGX SuperPOD), delivering 2 exaflops of AI performance34. While not yet on Blackwell, Recursion plans to adopt GB200 chips for future upgrades to achieve 25x efficiency5.

Use Case: Trains foundation models (e.g., Phenom-Beta) on 3.5 billion cellular images for drug target discovery45.

Isomorphic Labs (DeepMind Spin-Off)

Cloud-Based Access: Relies on Google Cloud’s AI Hypercomputer, combining TPUs and GPUs orchestrated via Kubernetes2. This setup sped up inference workflows by 50% and scales dynamically for tasks like molecular simulations.

Partnerships: Collaborates with Eli Lilly and Novartis, leveraging AlphaFold-derived models for small-molecule therapeutic discovery8.

Nvidia’s Silicon Valley Partners

Direct Hardware Provisioning: Nvidia operates its own DGX SuperPODs (e.g., Grace Blackwell GB200 systems) for R&D and provides reference architectures to partners like Microsoft Azure and Oracle Cloud5.

Pharma Collaborations: Examples include Novo Nordisk’s DGX GH200 deployment for diabetes drug discovery6 and BioNTech’s Kyber cluster (224× H100 GPUs)5.

Convergence of 25x Supercomputing and Deep Learning in Biotech

Key Drivers

Scale Demands: Training billion-parameter models on multi-omics data (genomics, proteomics) requires exaflop-scale compute. For example:

Energy Efficiency: Blackwell’s 25x efficiency enables sustainable scaling. BioHive-2 uses ~5 MW of power; a Blackwell upgrade could reduce this to ~0.2 MW for equivalent performance5.
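The power figure above is straightforward proportional scaling. A minimal Python sketch of that arithmetic follows, assuming the claimed 25x gain applies uniformly to the whole workload, which is an idealization. The function name `power_for_equivalent_work` is illustrative and does not come from any Nvidia tool.

```python
def power_for_equivalent_work(current_power_mw: float, efficiency_gain: float) -> float:
    """Power needed for the same work if efficiency improves by `efficiency_gain`.

    Assumes the gain applies uniformly to the whole system; real deployments
    (cooling, networking, storage) are unlikely to scale this cleanly.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return current_power_mw / efficiency_gain


# Figures quoted in the text: ~5 MW today, 25x claimed efficiency gain.
blackwell_power_mw = power_for_equivalent_work(5.0, 25.0)
print(f"{blackwell_power_mw:.1f} MW")  # prints "0.2 MW"
```

The result is an upper bound on the savings rather than a forecast, since the 25x figure is a best-case claim for specific AI workloads.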

Impact on Workflows

Generative AI for Drug Design

Virtual Screening at Scale

Recursion screened 36 billion compounds in 30 days using H100 GPUs5. If Blackwell’s 25x gain carried over directly to throughput, the same run could cover roughly 900 billion compounds per month.

Multi-Modal Foundation Models

Regional vs. Proprietary Access

| Company/Initiative | Compute Strategy | 25x Tech Adoption Status |
|---|---|---|
| Recursion | Owned DGX SuperPOD (H100 → GB200 planned) | Partial (H100); Blackwell pending |
| Isomorphic Labs | Google Cloud TPU/GPU hybrid | Indirect via cloud upgrades |
| Nvidia Partners | Co-developed clusters (e.g., Novo Nordisk) | Early Blackwell deployments (2025) |
| Academic/Government | Shared resources (e.g., Alps, JUPITER) | Grace Hopper → Blackwell roadmap |

Future Trajectory

2025–2026: Broad adoption of Blackwell/GB200 in pharma, enabling 100+ billion-parameter models for:

Personalized medicine: Patient-specific cancer vaccine design.

Climate-resilient crops: AI-driven genomic editing.

Energy vs. Performance: 25x efficiency could broaden access for smaller biotechs via cloud providers (e.g., NVIDIA DGX Cloud).

In summary, while leading biotechs and techbio firms are early adopters of 25x systems, access is mediated through owned infrastructure, cloud partnerships, or collaborative projects. The synergy between compute efficiency and deep learning is unlocking unprecedented scale in drug discovery and biological simulation.

Estimated Cost of Building a 25x Energy-Efficient Supercomputer Like Colossus

Current Cost Estimates:

Elon Musk’s xAI Colossus project in Memphis, targeting 200,000 Nvidia GPUs (with plans for up to 1 million), is estimated to cost at least $400 million for construction and infrastructure, with hardware costs alone projected at $4.3 billion for 200,000 GPUs and up to $27 billion for 1 million GPUs35.

The latest Nvidia Blackwell GB200 Superchips, which underpin the 25x energy efficiency claim, are estimated to cost $60,000–$70,000 per chip. Fully equipped server racks (NVL72) can reach $3 million each2.

The total cost for a supercomputer on the scale of Colossus (hundreds of thousands of top-end GPUs, supporting infrastructure, power, and cooling) is therefore in the multi-billion-dollar range—potentially $5–$10 billion or more for a next-generation, 25x-efficient system at the largest scale35.
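The estimates above can be cross-checked with some simple arithmetic. The Python sketch below derives the per-GPU cost implied by the quoted hardware totals, then prices a hypothetical all-Blackwell build of 200,000 GPUs from the quoted $3 million rack price. It assumes 72 GPUs per NVL72 rack and adds the $400 million construction figure; the function names are illustrative, and the scenario is an assumption, not a published xAI budget.

```python
import math

GPUS_PER_NVL72_RACK = 72  # each NVL72 rack houses 72 Blackwell GPUs


def implied_cost_per_gpu(total_hardware_usd: float, gpu_count: int) -> float:
    """Average hardware cost per GPU implied by a quoted total."""
    return total_hardware_usd / gpu_count


def rack_based_hardware_cost(gpu_count: int, rack_price_usd: float) -> float:
    """Hardware cost if every GPU were bought as part of a full NVL72 rack."""
    racks_needed = math.ceil(gpu_count / GPUS_PER_NVL72_RACK)
    return racks_needed * rack_price_usd


# Figures quoted in the text above.
per_gpu_200k = implied_cost_per_gpu(4.3e9, 200_000)   # 21,500 USD
per_gpu_1m = implied_cost_per_gpu(27e9, 1_000_000)    # 27,000 USD

# Hypothetical all-Blackwell build: 200,000 GPUs in $3M racks plus $400M construction.
blackwell_hardware = rack_based_hardware_cost(200_000, 3e6)
blackwell_total = blackwell_hardware + 400e6

print(f"Implied cost per GPU (200k build): ${per_gpu_200k:,.0f}")
print(f"Implied cost per GPU (1M build):   ${per_gpu_1m:,.0f}")
print(f"All-NVL72 estimate for 200k GPUs:  ${blackwell_total / 1e9:.1f} billion")
```

Under these assumptions the all-Blackwell estimate comes to roughly $8.7 billion, which sits inside the $5–$10 billion range given above.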

Comparison to Previous Generations:

Fugaku (Japan, 2020): $1.2 billion4

Tianhe-2 (China, 2013): $390 million4

Sierra (US, 2018): $325 million4

IBM Sequoia (US, 2012): $250 million4

Blue Waters (US, 2013): $208 million4

Summit (US, 2018): ~$200 million (not in provided results, but widely reported)

El Capitan (US, 2025): Not specified, but less than Colossus in compute and likely in cost8
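To put the list above in proportion, the following Python sketch divides the $4.3 billion hardware estimate quoted for the 200,000-GPU Colossus build by each earlier system's reported cost. The figures are nominal dollars taken from this document, no inflation adjustment is applied, and the variable names are illustrative.

```python
# Nominal (not inflation-adjusted) costs in USD, as quoted in the list above.
# Summit and El Capitan are omitted because their figures are not sourced here.
earlier_system_costs = {
    "Fugaku (2020)": 1.2e9,
    "Tianhe-2 (2013)": 390e6,
    "Sierra (2018)": 325e6,
    "IBM Sequoia (2012)": 250e6,
    "Blue Waters (2013)": 208e6,
}

colossus_hardware_usd = 4.3e9  # hardware estimate for 200,000 GPUs

# How many times more the Colossus hardware costs than each earlier system.
ratios = {
    name: colossus_hardware_usd / cost
    for name, cost in earlier_system_costs.items()
}

for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name}: Colossus hardware is about {ratio:.1f}x the cost")
```

Even against Fugaku, the most expensive system in the list, the quoted Colossus hardware estimate is more than three times higher before any inflation adjustment.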

Inflation and Technology Effects:

While the cost per unit of compute (petaflops or exaflops) has dropped dramatically over the last decade, the absolute cost of the largest AI supercomputers has risen sharply due to the exponential increase in scale, demand, and the premium for cutting-edge AI chips57.

Power and infrastructure costs have also soared, as these systems now require as much electricity as a mid-sized city5.

Conclusion:

Building a 25x energy-efficient supercomputer like Colossus today costs significantly more in absolute terms than previous government or academic supercomputers, even after adjusting for inflation.

The main drivers are the massive scale (hundreds of thousands to millions of GPUs), higher chip prices for the latest Nvidia Blackwell architecture, and the need for advanced power and cooling infrastructure235.

However, the cost per unit of compute and per watt of energy is much lower, reflecting the dramatic gains in efficiency and performance17.

Summary Table: Cost Comparison

| System | Year | Estimated Cost (USD) | Technology | Scale/Notes |
|---|---|---|---|---|
| Colossus (xAI) | 2025 | $4–$27 billion | Nvidia H100/GB200 | Up to 1 million GPUs |
| Fugaku | 2020 | $1.2 billion | ARM CPUs | Fastest at the time |
| Tianhe-2 | 2013 | $390 million | Custom CPUs/Accelerators | Top system in 2013 |
| Sierra | 2018 | $325 million | IBM/Nvidia | US nuclear simulation |
| Sequoia | 2012 | $250 million | IBM | US nuclear simulation |

Bottom line:

A next-generation, 25x energy-efficient supercomputer like Colossus is vastly more expensive than prior generations in total cost, even after inflation, but delivers far more compute per dollar and per watt2345.

Japan’s supercomputer Fugaku, which cost between $1–2 billion (not $4.2 billion) to develop and deploy, is one of the most ambitious and successful government supercomputing projects of the past decade10134. Here’s a detailed look at its applications and reputation in light of new AI-centric Nvidia-powered supercomputers:

What Has Fugaku Been Used For?

Fugaku was designed as a general-purpose, national-scale research platform, with a focus on societal and scientific challenges. Key applications include:

COVID-19 Research: Fugaku was rapidly deployed for pandemic response, simulating virus droplet transmission in indoor environments, which informed public health guidelines on mask use and ventilation. It also screened thousands of existing drugs for COVID-19 treatment, compressing what would have taken a year on older systems into just 10 days710.

Drug Discovery and Biomedicine: Fugaku has been used for large-scale molecular simulations, protein folding, and drug-receptor binding studies, accelerating pharmaceutical innovation37.

Climate and Disaster Modeling: Fugaku supports real-time tsunami prediction, large-scale weather forecasting, and earthquake simulations, helping Japan prepare for natural disasters28.

Materials Science and Energy: The system is used to search for new materials for solar cells and hydrogen production, as well as to model next-generation batteries and catalysts8.

AI and Machine Learning: Fugaku’s architecture, while CPU-based, is used for advanced AI research, including neural network training and quantum-classical hybrid computing in partnership with the Reimei quantum processor3.

Fundamental Science: It supports research in physics, including lattice QCD simulations and astrophysics1213.

Is Fugaku Now Seen as a Relative Failure?

No, Fugaku is not regarded as a failure. Despite the emergence of Nvidia GPU-based, AI-optimized supercomputers, Fugaku is widely viewed as a landmark achievement for several reasons:

Versatility and Societal Impact: Fugaku was intentionally designed for broad scientific and industrial use, not just AI. Its rapid deployment for COVID-19 research and disaster modeling has had tangible public benefits7213.

Technical Milestones: It was the world’s fastest supercomputer from 2020–2022, the first ARM-based system to reach the top ranking, and the only system to simultaneously top four major global benchmarks (LINPACK, Graph500, HPCG, HPL-AI)456.

Hybrid Computing Leadership: Fugaku is now part of a hybrid quantum-classical system (with Reimei), keeping Japan at the forefront of next-generation computing paradigms3.

Strategic Value: Fugaku has strengthened Japan’s scientific infrastructure, semiconductor ecosystem, and national resilience14.

While Nvidia’s Blackwell/GB200-powered systems offer far superior AI training efficiency and raw throughput, Fugaku’s design priorities were different: it emphasized CPU versatility, energy efficiency for a wide range of applications, and national self-reliance in computing hardware113. Japan is already planning “Fugaku Next,” a zetta-scale system that will target AI workloads more directly9.

Summary Table: Fugaku’s Impact and Legacy

| Aspect | Fugaku (Japan, 2020) | Nvidia Blackwell/GB200 AI Supercomputers |
|---|---|---|
| Cost | $1–2 billion | $5–10+ billion (largest projects) |
| Architecture | ARM A64FX CPUs | Nvidia GPUs (H100, Blackwell/GB200) |
| Main Use Cases | Science, health, disaster, AI | AI training, generative models |
| Societal Impact | High (COVID, disaster, energy) | Emerging |
| Reputation | Landmark success | New standard for AI |

In conclusion:

Fugaku is not seen as a failure; it is a globally respected, multi-purpose supercomputer that has delivered on its national and scientific goals. The rise of Nvidia-powered AI supercomputers reflects a shift in global priorities toward AI, but does not diminish Fugaku’s achievements or relevance. Japan is already investing in next-generation systems to stay competitive in the AI era914.

Yes, Taiwan has announced broad application areas for its new 25x energy-efficient supercomputer, emphasizing both national priorities and industry innovation. The main initial application domains include:

Sovereign AI and Language Models:

The supercomputer will be used to develop and deploy large language models tailored to Taiwan’s unique linguistic and cultural context. Projects like Taiwan AI RAP and the TAIDE initiative aim to create trustworthy, locally relevant AI for applications such as intelligent customer service, translation, and educational tools. These efforts are designed to empower local startups, researchers, and enterprises with advanced generative AI capabilities1.

Scientific Research and Quantum Computing:

The system will support advanced scientific computation across disciplines, including quantum computing research, climate science, and broader AI development. Researchers from academic institutions, government agencies, and small businesses will have access to accelerate innovative projects1.

Smart Cities, Electric Vehicles, and Manufacturing:

Foxconn will leverage the supercomputer to drive automation and efficiency in smart cities, electric vehicles, and manufacturing. This includes optimizing transportation systems, developing advanced driver-assistance and safety systems, and enabling digital twin technologies for smarter urban infrastructure and streamlined manufacturing processes235.

Healthcare and Biotech:

The supercomputer is expected to power breakthroughs in cancer research and other health-related AI applications, supporting Taiwan’s ambitions in biotech and medical innovation5.

Industry and Economic Development:

The supercomputer is positioned as a catalyst for Taiwan’s AI industry, fostering cross-domain collaboration and supporting the growth of local businesses and the broader tech ecosystem1234.

Summary:

Taiwan’s new supercomputer will initially focus on sovereign AI (especially language models), advanced scientific research (including quantum computing), smart cities, electric vehicles, manufacturing, and healthcare. The system is intended to empower researchers, startups, and industry, with a strong emphasis on supporting Taiwan’s technological autonomy and global AI leadership12345.

It is broadly correct to assume that Colossus’s main uses are closely tied to Elon Musk’s major business interests. The system is also positioned to support a wide range of advanced AI applications, including many similar to those announced for Taiwan’s new supercomputer. Here’s how the uses compare and where Musk’s approach may differ:

Primary Uses: Musk’s Businesses

xAI and Grok:

Colossus’s main function is to train xAI’s large language models (LLMs), especially the Grok family, which powers features for X (formerly Twitter) and aims to compete with OpenAI’s GPT series12578.

X (formerly Twitter):

The supercomputer supports AI-driven features and moderation for the social media platform15.

SpaceX:

Colossus is used to support AI operations for SpaceX, potentially including satellite communications, mission planning, and autonomous systems1.

Tesla (indirectly):

While Tesla’s main AI training happens on its own Cortex and Dojo supercomputers, Colossus’s advances in general AI and multimodal models may eventually inform Tesla’s self-driving and robotics efforts56.

Broader AI and Scientific Applications

Musk and xAI have stated ambitions that go beyond his core companies:

Artificial General Intelligence (AGI):

Colossus is explicitly designed to push toward AGI, meaning AI that can reason, learn, and act across domains, not just in narrow tasks367. This means supporting research in reasoning, multimodal learning (text, vision, speech), and even robotics.

Scientific Discovery:

xAI’s mission includes using AI to accelerate scientific discovery, such as new materials, energy solutions, and potentially drug discovery, areas similar to those prioritized by Taiwan’s supercomputer7.

Autonomous Machines and Robotics:

Plans include training models for autonomous systems, which could impact robotics, manufacturing, and smart infrastructure, again paralleling some of Taiwan’s focus areas7.

Comparison: Colossus vs. Taiwan’s 25x Supercomputer

| Application Area | Colossus (Musk/xAI) | Taiwan 25x Supercomputer |
|---|---|---|
| Social Media/LLMs | Yes (Grok, X) | Yes (sovereign language models) |
| Autonomous Vehicles/Robotics | Yes (Tesla, SpaceX, robotics) | Yes (smart cities, manufacturing, EVs) |
| Scientific Research | Yes (materials, energy, science) | Yes (quantum computing, health, biotech) |
| Healthcare/Biotech | Potential/future | Yes (cancer research, biotech) |
| National Sovereignty/Industry | Private, but with global ambitions | National/industry empowerment focus |
| AGI Research | Yes (explicitly stated) | Not explicit, but supports advanced AI |

Anything Completely Different?

AGI Ambition:

Musk’s explicit pursuit of AGI (AI that could match or exceed human intelligence across domains) is a more radical and open-ended goal than most government or national supercomputing projects, which tend to focus on specific sectors or national priorities367.

Integration with Tesla Energy:

Colossus is paired with the world’s largest Tesla Megapack battery installation, making it a pioneering experiment in sustainable, large-scale AI infrastructure36. This energy strategy is unique among major supercomputing projects.

Private, Rapid Development:

Colossus is a privately owned, rapidly expanding system, not a national or academic resource. Its uses are guided by Musk’s business and technological vision, rather than by national policy or broad academic access57.

In summary:

Colossus’s primary uses are closely linked to Musk’s businesses (xAI, X, SpaceX, and potentially Tesla), but its architecture and stated mission enable it to support a broad spectrum of AI applications, many of which overlap with the focus areas of Taiwan’s new supercomputer—such as language models, scientific research, and autonomous systems. What sets Colossus apart is its explicit AGI ambition, its integration with Tesla’s energy technology, and its status as a private, rapidly deployed infrastructure for frontier AI research and commercial innovation1235678.

Elon Musk defines AGI (Artificial General Intelligence) as an AI system that is “smarter than the smartest human”—that is, a machine capable of matching or exceeding human intelligence across a broad range of cognitive tasks, not just narrow, specialized domains1356. In interviews and public statements, Musk has repeatedly predicted that AGI could arrive as soon as 2025 or 2026, emphasizing that, by his definition, AGI would be able to perform any intellectual task a human can, and potentially do so better356.

Musk’s vision for AGI includes:

Learning agents capable of reasoning, adapting, and generalizing across domains.

Systems that can drive scientific discovery, including in biotech, healthcare, and materials science—potentially leading and accelerating projects in those fields2.

Integration with robotics (e.g., Tesla’s Optimus robot) and brain-computer interfaces (Neuralink), aiming for AI that can interact with and augment the physical world2.

Digital twinning and large-scale modeling, which are congruent with Jensen Huang’s vision of AI-driven simulation and digital representations of real-world systems.

However, Musk’s definition is much broader and more ambitious than simply building advanced learning agents or digital twins. He envisions AGI as a general-purpose intelligence, not just a set of powerful tools for specific industries135.

Current Reality:

Most experts in the field agree that AGI as Musk defines it (a system that could autonomously lead biotech projects or match human creativity and reasoning across the board) is not imminent1. While AI is rapidly advancing in learning, simulation, and automation (and can already assist in biotech and digital twin projects), the leap to true AGI remains highly speculative and controversial13.

In summary:

Musk’s definition of AGI is a machine intelligence that can outperform humans at virtually any intellectual task, including leading complex projects like those in biotech. While some near-term visions (learning agents, digital twins) are congruent with Jensen Huang’s and the broader AI industry’s roadmap, Musk’s broader AGI goal is far more ambitious and, according to most experts, still out of reach135.

Elon Musk’s vision for AGI is not just about building AI to augment his own cognitive abilities, but rather about creating an intelligence that can do everything the human brain can do—and more.

AGI as Human-Level and Beyond:

Musk defines AGI as a system that can perform any intellectual task a human can, matching or exceeding human intelligence across all domains3. His goal is not limited to personal enhancement, but to create a machine intelligence that could, in principle, independently reason, solve problems, and innovate at or above the level of any human.

Augmentation vs. Replacement:

Through Neuralink, Musk does pursue the idea of augmenting human brains by enabling direct brain-computer interfaces, potentially leading to “AI symbiosis,” where humans and AI work together or even merge capabilities45. This could allow humans to keep pace with rapidly advancing AI, communicate directly with computers, and share information brain-to-brain.

AGI Ambition:

However, Musk’s broader AGI projects (such as xAI and his push for “TruthGPT”) are about building an autonomous intelligence that is not just an assistant or augmentation, but a system that could independently perform any cognitive task, including leading complex scientific or biotech projects23. He envisions AGI as a tool that could accelerate discovery, automate research, and potentially make decisions and innovations without direct human oversight.

Musk’s Public Statements:

At CES 2025, Musk predicted that AI would soon be able to complete nearly all cognitive tasks that don’t involve physical manipulation, and that within a few years, AI could surpass human intelligence in many domains2. He sees this as both an opportunity (for progress and problem-solving) and a risk (if not properly aligned with human values).

In summary:

Musk’s intent is to build AGI that can do everything his brain can do—and much more—not just to augment his mind, but to create a truly general, autonomous intelligence. While he also pursues human enhancement through Neuralink, his AGI ambitions are aimed at creating a machine intelligence that could independently master any intellectual domain, including those as complex as leading biotech innovation2345.

Yes, Neuralink is already in use, but its current focus is strictly medical and experimental—not general cognitive enhancement or mainstream brain augmentation.

Current Status and Focus

Clinical Trials:

As of mid-2025, Neuralink has implanted its brain-computer interface (BCI) devices in at least five human patients, with plans to expand to 20–30 more this year. These trials are taking place in the U.S., Canada, and other countries1256.

Primary Applications:

Restoring Autonomy for Paralysis: The main goal is to help people with severe paralysis (from spinal cord injuries, ALS, etc.) control digital devices—such as computers, smartphones, and even robotic arms—using only their thoughts123467.

Communication: Neuralink enables non-verbal individuals to communicate by controlling a virtual keyboard or mouse with their brain signals34.

Vision Restoration: Neuralink is developing a “Blindsight” implant to restore basic vision to blind individuals by stimulating the visual cortex. This device has received FDA Breakthrough Device Designation and is expected to begin human trials by the end of 202515.

Speech Restoration: The device is also being developed to help restore speech for people with severe impairment due to neurological conditions24.

Prosthetic Control: Ongoing studies aim to allow paralyzed patients to control robotic arms or other prosthetics directly with their thoughts1.

Other Potential Medical Uses:

Neuralink aspires to treat a range of neurological disorders, monitor and possibly treat mental health conditions, and eventually restore or enhance memory and cognitive function, but these are future goals and not part of current human trials3.

Summary Table: Neuralink’s Current Focus

| Application Area | In Use / In Trials? | Details/Status |
|---|---|---|
| Paralysis/Device Control | Yes | Patients control digital devices with thoughts |
| Communication | Yes | Virtual keyboards/mice for non-verbal individuals |
| Vision Restoration | Planned | Blindsight device, FDA breakthrough status |
| Speech Restoration | Planned | FDA breakthrough status for severe speech impairment |
| Prosthetic Control | Early Trials | Robotic arm control by thought |
| Cognitive Enhancement | Not Yet | Long-term aspiration, not current focus |

Key Point

Neuralink’s technology is not currently used for general cognitive augmentation or “boosting” healthy brains. Its present use is focused on restoring lost functions for people with severe disabilities, such as paralysis or blindness. Broader applications like memory enhancement, learning acceleration, or general brain-computer symbiosis remain speculative and are not part of current clinical use12357.